Introduction

If you're a member of this or any other technology-based forum, odds are that you've noticed the several versions of Microsoft's latest offering, Windows Vista. If you haven't, well... please come out from under that rock and get with the programming!

One of the biggest changes has been the clear offering of, and even a gentle push towards, the 64-bit version of the OS. Indubitably, this extra option becomes fodder for forum discussion, usually along the lines of:

Forumite 1: "Hi, I am building a new system and I wanted to know what your thoughts were on whether I should use 64-bit or 32-bit Vista? I've heard varying things around the net regarding compatibility, and was hoping someone could help."

Forumite 2: "Hi! I just read your post. You should definitely go with the 32-bit version. There's tons of compatibility problems with 64b (Just look at XP-64), and it's going to die a long, drawn-out death. Besides, the only actual difference between them is that 64-bit can make proper use of 4GB of RAM."

Forumite 1: "Oh, ok! Thanks!"

Now, what's wrong with this picture? Quite a lot, actually. Time and time again, self-proclaimed gurus decide that the only real difference between 32-bit and 64-bit computing is the memory limit. Are they right that RAM is a reason? Definitely, but that's missing about 99 percent of the true differences. By that logic, the only major difference between your old 8-bit Nintendo console and your Xbox 360 is processor speed. I think we can all agree that's just wrong.

So, if memory is only one of the myriad changes that come with 64-bit computing, what else does it actually do? And how? More importantly, why should we even care? We'll get to each of these questions in turn, but first, let's get some definitions straight and take a little trip down memory lane.

The 64 million dollar question

So, what is all this 64-bit stuff, anyway? Well, for the moment we'll keep this relatively simple, as some much deeper definitions will follow on the next page. For now, if you're not aware, 64-bit describes the size of the units of data, called words, that your computer works with. Words (which are composed of a piece of data, instructions to manipulate that data, or both) are the key to computing. As such, the word size a computer's system allows can greatly alter how quickly it can process data and how precise that data can be: the larger the word, the more information that can be passed through a processor in every clock cycle, or the more transformations that can be performed on that data.
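To put some numbers on word size, here is a minimal Python sketch that computes the largest value fitting in a 32-bit versus a 64-bit word, and how many bytes a flat pointer of that width could address. The function names are hypothetical, chosen for illustration; the model assumes one address per byte and ignores the narrower physical address buses that real processors implement. The 32-bit case is where the familiar 4GB ceiling comes from.

```python
# Simplified model: an n-bit word holds 2**n distinct bit patterns.
# Real CPUs and operating systems impose extra limits (e.g. fewer
# physical address lines than the word width), which this ignores.

def max_unsigned(bits: int) -> int:
    """Largest unsigned integer that fits in a word of `bits` bits."""
    return (1 << bits) - 1

def flat_address_space(bits: int) -> int:
    """Bytes reachable by a flat pointer of `bits` bits (one address per byte)."""
    return 1 << bits

GIB = 1 << 30  # 1 GiB = 2**30 bytes

for width in (32, 64):
    print(f"{width}-bit: max unsigned value = {max_unsigned(width):,}; "
          f"flat address space = {flat_address_space(width) // GIB:,} GiB")
# 32-bit: max unsigned value = 4,294,967,295; flat address space = 4 GiB
# 64-bit: max unsigned value = 18,446,744,073,709,551,615; flat address space = 17,179,869,184 GiB
```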

The concept of words is a tricky thing that we'll get into much more deeply on the next page, but for now what's important to know is that 64-bit computers can theoretically process double the information per cycle. However, that is very different from running twice as fast!
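A minimal sketch of what "double the information per cycle" means in practice: a processor with 32-bit registers has to add two 64-bit numbers in two steps (the low halves first, then the high halves plus any carry), while a 64-bit processor can do it in one. The function names below are hypothetical, and the model counts add operations rather than clock cycles, since real timings depend on the specific chip.

```python
# Hypothetical model of 64-bit addition on 32-bit vs 64-bit hardware.
# Counts ALU add operations, not clock cycles.

MASK32 = (1 << 32) - 1
MASK64 = (1 << 64) - 1

def add64_with_32bit_alu(a: int, b: int) -> tuple[int, int]:
    """Return ((a + b) mod 2**64, number of 32-bit adds used)."""
    a_lo, a_hi = a & MASK32, (a >> 32) & MASK32
    b_lo, b_hi = b & MASK32, (b >> 32) & MASK32

    low_sum = a_lo + b_lo            # first add: low halves
    carry = low_sum >> 32            # 1 if the low half overflowed
    high_sum = a_hi + b_hi + carry   # second add: high halves plus carry
    result = ((high_sum & MASK32) << 32) | (low_sum & MASK32)
    return result, 2

def add64_with_64bit_alu(a: int, b: int) -> tuple[int, int]:
    """Return ((a + b) mod 2**64, number of 64-bit adds used)."""
    return (a + b) & MASK64, 1

x, y = 0x0000_0001_FFFF_FFFF, 0x0000_0000_0000_0001
print(add64_with_32bit_alu(x, y))  # (8589934592, 2)
print(add64_with_64bit_alu(x, y))  # (8589934592, 1)
```

The two-to-one saving only shows up when the data genuinely needs 64 bits; adding two small numbers takes a single operation on either chip, which is one reason a 64-bit CPU does not simply run everything twice as fast.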

It's all about the Pentiums...or not

Though 64-bit computing seems like a relatively new invention, it's actually been around since long before the desktop computer. The first proper 64-bit system was IBM's 7030 Stretch computer, in 1961. Since that time, 64-bit computing has enjoyed a niche but fruitful life in high-end servers, dedicated processing setups, and supercomputers.

It took many years for 64-bit computing to filter down to even common server levels. In 1994, Intel announced its first plans to move its servers to 64-bit through a joint venture with HP. Less than a year later, Sun released its native 64-bit SPARC systems for enterprise-level workstations. It seemed like things were finally turning to 64-bit.

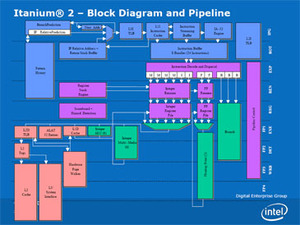

It would be another six years before Intel launched its first 64-bit offering in 2001. The Itanium line, affectionately dubbed the "Itanic" due to its commercial flop, was the culmination of the research Intel had begun with HP in 1994. However, it was still targeted at servers, leaving desktop users out in the cold. Finally, 64-bit hit the desktop market with AMD's launch of the Opteron and Athlon 64 chips in 2003. Though these chips were not based on a "pure" 64-bit architecture (as they had to maintain compatibility with existing operating systems and software), they could use an expansive set of 64-bit instructions known as x86-64.

The problem is, whether it's pure 64-bit or x86-64, nothing will work without an operating system that can make use of it. And aside from *nix operating systems, nothing the average consumer used supported true 64-bit computing until Microsoft's lacklustre 64-bit re-do of Windows XP (Windows XP Professional x64 Edition) in 2005. Contrary to popular belief, OS X did not support native 64-bit applications until Tiger; earlier releases ran applications as 32-bit processes, even though the G5 chip in the final generation of PowerPC Macs could execute 64-bit instructions.

But before we get too far into that, let's take a look at some of the more basic ideas behind 64-bit.
